In the last post I explained the basics of using the Trove API by importing the results into the browser window. A more powerful way to use the results returned from the API is to import the results into an Excel spreadsheet. Excel has its limitations but it is one way that people who don’t have any programming skills can store and analyse results gained through the API.

There are two methods of importing the data into Excel:

- In a blank workbook in Excel go to the Data tab. Click on the ‘From Web’ button in the ‘Get External Data’ section of the ribbon on the left hand side. Copy and paste your API call into the ‘Address’ window and click ‘Go’. You will see the same results that you saw in your browser window. Click the ‘Import’ button at the bottom of the window. A message will dome up saying “[t]he specified XML source does not refer to a schema…” Click ‘OK’. Specify which cell your table should start from and the data will load into your spreadsheet.

- Import the data via an XML file:

- Place your API call in a browser like we did before. After you have received the results copy the source code from your browser:

i. In Firefox you can display the source code by going to the orange Firefox tab in the top left of your screen, click ‘Web Developer’ then ‘Page Source. Or just click Ctrl+U.

ii. In Chrome you need to click on the four lines next to your address bar in the top left of your browser window. Click Tools/View Source.

- Select all the code, copy it into a Notepad file, save it as a .xml file with UTF-8 encoding. You will find the settings for the encoding next to the save button, the default is ANSI so you will need to remember to change it.

- Open an Excel file. In the Data tab click on the ‘From Other Sources’ button and select ‘From XML Data Import’. A message will come up saying “[t]he specified XML source does not refer to a schema…” Click ‘OK’. Specify which cell your table should start from and the data will load into your window.

The first method is the quickest but the second method is better for dealing with any problems you may encounter.

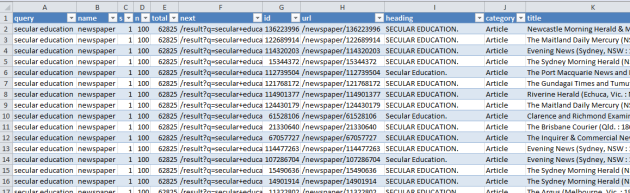

Part of the spreadsheet showing results of a search for the words ‘secular’ and ‘education’ using the Trove API. Click to enlarge.

The spreadsheet you now have is sortable. Using the buttons on the top of each column you can explore the results by filtering out certain data. Microsoft Word’s mail merge facility allows you to export the data into Word for more presentable results. You can also use Voyant Tools to explore the data further.

Record Your Workflow

You need to make your spreadsheet easy to review. I do this by clearly labelling my data and documenting the manipulation of the data that I do in the spreadsheet.

It is a good idea to note the date and time you created the spreadsheet. More newspapers are uploaded to Trove regularly. The results you receive on one day will be different to those you receive on another. The ‘secular’ and ‘education’ search is a good example of this. The search I did a few days ago for my last post returned 62,744 results, yesterday (13/3/2014) I received 62,825 results.

Sometimes you will receive an error message from Excel when you download the articles in this way. You should record these error messages too. A message that says “Some data was imported…” indicates that there may be some fields missing from your spreadsheet. In the work I have done I have found that these are generally fields with no data in them. Generally this error occurs when the ‘Status’ field for every article is empty so Trove does not send ‘Status’ data to you. Likewise there ay be no information about the edition of the newspapers your articles appeared in, so Trove does not return ‘Edition’ data.

The other error message that often arises is “Data has been truncated”. Excel has limits on the number of characters each cell can hold. A download that includes the complete text of articles is likely to give rise to this error, particularly if the search includes articles in the nineteenth century. To ascertain which items are affected, create a column next to the articleText column and calculate the number of characters in the articleText column using the ‘=Len’ function. Sort the column from largest to smallest. If you have any cells with the number 32767 you have found the truncation error. Those articles are too lengthy. You are finding the limits of Excel.

Dealing with Pre-Twentieth Century Dates

Excel does not cater for dates prior to 1900. The consequence of this is that if your spreadsheet has a mixture of nineteenth and twentieth century dates you will not be able to sort it in date order.

The way around this is to convert the dates into numbers and then sort on these. Use the mid, left and right functions to separate the dates into three columns, Year, Month and Day. Then stitch the numbers in the three columns together using the concatenate function to create a number in the yyyymmdd format. Call this column ‘DateSort’ or something similar. Do not include separators such as dashes or slashes to separate the components of the number as Excel may automatically treat the number as a date and cause the same problems you began with. Use the DateSort column to sort the spreadsheet in date order.

API Call Too Long

Another problem you may encounter using Excel is the API call may have too many characters for Excel to handle. Sometimes you can restructure the query to reduce the number of characters. Another way of dealing with this is using a link shortener such as bitly to reduce the size of the URL. However, I have found that this only works in limited circumstances. It is nice when it does work though!

The best approach to deal with this problem is to download the data via an XML file as described in (2) above.

Rogue Data

I was importing an XML file when I received the following error message:

Invalid file reference. The path to the file is invalid, or one or more of the referenced schemas could not be found.

I knew the path was valid as I had successfully downloaded another file from the same directory. This message was too vague to work out what the problem was. In such situations it is a good idea to do the same task using a different method so I went to the developer tab and clicked on the ‘Import’ button on the right hand side of the ribbon. [If you can’t see the developer tab go to File/Options/Customize Ribbon. On the right hand side you will see a list of ‘Main Tabs’. Make sure that ‘Developer’ has been checked (ticked).]

When importing the XML file using the Developer tab I received an error message again, but this error message was much more useful:

Reference to undefined entity ‘tt’.

If all else fails and you really don’t understand what an error message is telling you, do a search of it online. I found this useful article that indicated that “undefined entity” might be referring to those elements in the XML file beginning with ‘&’ an ending in ‘;’. As always there is a good w3schools.com explanation of these elements.

I then searched for ‘&’ and found that most instances were ‘<’. In fact there were a huge number of these elements in my XML file. This is because ‘<’ is the element used to represent the ‘<’ which is used for html tags (see URL Encoding Reference list). I would be sitting there for hours if I was to check each one and I probably would be so bored that I would miss it.

The XML returned by the Trove API does not have any carriage returns or tabs so when you insert it into an html editor it is one massive blob of code all on one line. To isolate the area where the problem was occurring I started inserting the line breaks and indentations. Every now and then I would try to import it into Trove again. I continued doing this until I found that the error was on line 73. There was a lot of text on this line as it included the full text of an article, but I found the gremlin with considerable help from the find facility (Ctrl+F). One of the ‘<’ characters had been incorrectly coded as ‘&tt’. I changed this in my XML file and the import worked perfectly.

I was fortunate that the error was near the beginning of the file. An even better approach would have been to cut the file in half to work out which half the error was in, then cut the file with the error in by half again etc etc.

Check Your Work!

A word of warning: don’t get entranced by the beauty of your spreadsheet. It looks so neat and ordered but errors that affect your analysis can be lurking in those rows and columns. Harvard economists, Carmen Reinhart and Kenneth Rogoff made a mistake that we could all make in an Excel spreadsheet. They failed to include all the data in one column in their formula to calculate an average. This error became very public and embarrassing when a student discovered that the mistake had worked its way into a paper Reinhart and Rogoff wrote. This paper influenced government economic policy in many countries.

Spreadsheets are seductive. The neatness of spreadsheets, the ease with which rows and columns are filled with data, the pretty graphs that we can create: they all seduce us into thinking it must be correct. Carefully test your results by:

- Comparing them with some of the results from Advanced Search in Trove;

- Sorting the columns to find unexpected results;

- Checking that every download has the fields that you want in the order that you want them.

- Reviewing a random sample of articles to see whether it is the type of data you were expecting. This is where you should use your common sense and your background in the topic of the search to question whether the results make sense.

The fact that you don’t receive an error message does not necessarily mean that your results are correct. Always keep in mind that the results for newspaper articles are determined by the accuracy of the OCR. We know from using Trove that there is a lot of text correction still required. You should also keep in mind the possibility that some newspapers that are relevant for your search may not be included in the database or some years may not have been digitised. Missing data can be just as important as the data that you have captured.

Conclusion

Using Excel for your API calls is a good way of getting to know the Trove API better. It is also a good way of doing a preliminary test of your results before committing to more substantial programming work. If you want to use the API to do a substantial amount of data retrieval and analysis you should construct a program to do this.

In my next post I will share some more sophisticated ways of configuring your Trove API calls to refine your search of articles in the newspaper zone of Trove.

References and Further Reading

- Borwein, Jonathon and David H Bailey, ‘The Reinhart-Rogoff error – or how not to Excel at economics‘, The Conversation, 23/4/2013.

- European Spreadsheet Risks Interest Group, ‘Best Practice‘.

- European Spreadsheet Risks Interest Group, ‘EuSpRIG Horror Stories‘.

- Howard, Sharon, ‘Unclean, unclean! What historians can do about sharing our messy research data‘ by Sharon Howard, Early Modern Notes, 18/5/2013

- Perkins, Yvonne, ‘Excel Tips for Digital Humanities‘, some notes for participants at my session on using Excel for cleaning data, THATCamp Sydney, October 2013.

- w3schools.com, URL Encoding Reference

This is part two of a series about the basics of using the Trove API. The other posts are:

I think I might have found an error, but correct me if I am wrong! When you change the s value by 1 (ie s=0, s=1, s=2 etc) and then run the API each time, it only returns one additional ‘new’ item (ie article). So that means of the 100 returned, 99 will be duplicates. I think the correct instruction is s=0, s=101, s=202. What do you think?

Thankyou for making this comment Travis. You are right, the ‘s’ values are not page numbers but the number of the result that you wish to have the download to commence from. Keeping in mind that the first result starts at zero, then the second group of results would have to be s=100, then s=200 etc. If you use s=101 you will miss result number 100 because it falls after s=0 and before s=101.

I have corrected and expanded on the explanation of the ‘s’ values in the previous post, .

It is great that you are working through these posts and are sharing what you have discovered. Have you got a project in mind using the Trove API?

I’ve been having immense fun with Excel VBA since your post as it’s broken down the barriers to explore and led the way to show us how to use Excel to extract into XML in an very easy way. My VBA code is a work in progress, but so far I can loop through each ‘block’ of 100 records without user intervention and insert them into a single worksheet (To help Trove, I’ve inserted a pause which can be adjusted ie 30 seconds).

What I am trying to overcome is to see if I can some way minimise the post-data cleanup once all the data is extracted. I’ve also noticed that the Trove API is inconsistent with the columns it returns. This is really noticeable in the last block of records returned, the “Next” column is not inserted. Other issues are the “Edition” column can make an appearance in one XML table, but not another so again the columns are out of sync.

I’m not sure it’s possible if we can ‘control’ the information returned such as turning off “Next” column. I might have to do a check such as “If Edition column exists, move to last column” so the data is in sync.

Basically my project is simply to enter a search criteria (ie “brighton%20cemetery”) and extract the records depending on the Zone (ie newspaper) and Category (ie Article) selected. All thanks to your excellent blog.

Thankyou Travis, I’m enjoying your comments and they are pushing me to explore this API further. I don’t know how to handle the issue you have identified. It is a fundamental problem that anyone using the API is going to encounter. Hopefully others who read this may be able to contribute a solution.

I’m currently exploring a virtual rabbit warren of different paths to find a workable solution to minimise the post cleaning of data and ensuring the columns are all in sync. I’m sure we can also automate your manual solution of tidying up the pre-1900 and post-1900 date issue as well so the user has the ability to sort the date column.

Other ideas is to introduce date range ie 1910 to 1920 (I have already managed to add the Category as an option without breaking the API query) and Newspaper title. Would love to know if there’s a way to restrict the newspaper by State, because a query like “cheltenham cemetery” will include UK, SA and Vic.

Not sure if my message was recorded on the Trove forum, but I found another facet error from the one you picked up – “Family notice” should be “Family Notice” http://trove.nla.gov.au/general/api-technical under 5.1 List of supported facets. I think “Details lists” is also suss.

We are working on similar issues at about the same time! I’m just about to publish a post that will answer your question about Family Notices and ‘Details lists’. Give me a few minutes to tidy it up and those questions will be answered.

The newspaper zone of Trove only includes Australian newspapers so there are no UK newspapers in there. You can restrict your search by state (I’ll cover that some other day). However, this will not prevent your Victorian results from including articles about the SA cemetery. I suggest that you try using ‘NOT’ to exclude results with Adelaide in them to address this issue.

Thank you for this post — it’s made my first venture into exporting results from the API so much easier!!

I’m glad that it helped you. That is exactly why I wrote it and the other posts on this site. Thanks for the feedback!